In the summer of 2018, UCLA’s School of Theater, Film and Television (TFT) and REMAP held a three-week intensive Summer Institute, “Future of Storytelling for Social Impact”, where a multidisciplinary group of students and staff explored using augmented reality (AR) technology onstage for social-impact storytelling.

The result was an interactive theatrical performance in which nine audience members, equipped with AR mobile devices, embark on a three-act journey to interactively explore a world adapted from N.K. Jemisin’s novel “The Fifth Season”.

From the outset of the project, the goal was to build a collaborative AR experience (also referred to as social AR, multiplayer AR, etc.).

There are very few good examples of multiplayer AR, which is understandable given the current state of the technology.

Collaborative AR assumes that different users can see and interact with shared AR elements in the same physical location.

This means that the AR coordinate system must be unified across multiple devices.

Generally speaking, this is not an easy task, although leading AR frameworks have made attempts to solve it.

We ran some initial experiments with the ARCore Cloud Anchors API, which were satisfactory but didn’t meet our system requirements:

a) Anchoring speed: “anchoring” a device in the physical space shouldn’t take the user long; on average, Cloud Anchors take about 15–30 seconds to resolve.

b) Scale: support for 8+ AR devices simultaneously.

c) Easy recovery: AR tracking can be lost, either due to an occasional jerky movement or the poorly lit theatrical environment, so a user needs a clear and simple way to re-anchor the device in space; recovering with Cloud Anchors requires the user to walk back to the anchor location and perform 10–15 seconds of scanning, which is a significant distraction from the experience.

In the end, we decided to proceed with a more “old-fashioned” approach: multiple fiducial markers (using the ARCore Augmented Images API) placed at known locations across the physical space.

We modeled the stage in 3D and distributed 24 marker images evenly at known locations; the images were painted on the stage floor.

Scanning any one of the markers gave the device enough information about its location within the virtual world.

That way, anchored devices are able to observe the same scenes and interact with the same objects.
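The arithmetic behind marker anchoring can be sketched as a composition of rigid transforms. The sketch below is a simplified 2D version (position on the stage floor plus yaw), assuming each painted marker has a known pose in the shared stage model and that the AR framework reports the marker’s pose in the device’s own session frame. The marker IDs, the 6 × 4 grid and the 1.5 m spacing are hypothetical placeholders, not the production layout, and `Pose2D` / `world_from_session` are illustrative names rather than ARCore API.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Rigid transform in the stage floor plane: translation (x, z) in meters, yaw in radians."""
    x: float
    z: float
    yaw: float

    def apply(self, px: float, pz: float) -> tuple[float, float]:
        """Map a point from this transform's source frame into its target frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (self.x + c * px - s * pz, self.z + s * px + c * pz)

    def compose(self, other: Pose2D) -> Pose2D:
        """Return self * other, i.e. apply `other` first, then `self`."""
        x, z = self.apply(other.x, other.z)
        return Pose2D(x, z, self.yaw + other.yaw)

    def inverse(self) -> Pose2D:
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(-(c * self.x + s * self.z), -(-s * self.x + c * self.z), -self.yaw)


# Hypothetical layout: 24 floor markers on a 6 x 4 grid, 1.5 m apart, all with yaw 0.
MARKER_WORLD_POSES = {
    f"marker_{row * 6 + col:02d}": Pose2D(col * 1.5, row * 1.5, 0.0)
    for row in range(4)
    for col in range(6)
}


def world_from_session(marker_id: str, marker_in_session: Pose2D) -> Pose2D:
    """Return world_T_session = world_T_marker * inverse(session_T_marker).

    `marker_in_session` is the marker pose as tracked in this device's own AR
    session frame. Re-anchoring after tracking loss is just another call with
    whichever marker the user scans.
    """
    world_T_marker = MARKER_WORLD_POSES[marker_id]
    return world_T_marker.compose(marker_in_session.inverse())
```

Because every device maps its own session frame into the same world frame, two phones that scanned different markers agree on where a shared AR Element sits. A real ARCore pose is a full 6-DoF transform; restricting it to the floor plane here only keeps the arithmetic readable.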

Several projectors installed in the space display “background” elements from the 3D world. Together with the mobile devices, they made the experience more immersive.

The Game World comprises 3D assets of two main classes: “AR Elements” and “Projection Elements”. Asset classes are configurable at runtime, which allows rendering to be selectively enabled or disabled per device class.

AR Elements are “visible” only to AR-capable mobile devices, i.e. the smartphones carried by the audience members.

Projection Elements, by contrast, are meant to be rendered only by desktop clients.

These machines drive the three projectors installed in the space and can output different camera views, cued from a remote control machine.
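The per-device-class rendering rule can be sketched as a small visibility table consulted before an asset is drawn. The enum members mirror the two asset classes described above; `VisibilityConfig` and its `set_visible` toggle are hypothetical illustrations of “configurable at runtime”, not the project’s Unity code.

```python
from enum import Enum, auto


class AssetClass(Enum):
    AR_ELEMENT = auto()
    PROJECTION_ELEMENT = auto()


class DeviceClass(Enum):
    MOBILE_AR = auto()  # audience smartphones
    DESKTOP = auto()    # projection nodes


class VisibilityConfig:
    """Runtime-mutable table of which asset classes each device class renders (hypothetical)."""

    def __init__(self) -> None:
        # Default policy from the text: AR Elements on phones, Projection Elements on desktops.
        self._rules = {
            DeviceClass.MOBILE_AR: {AssetClass.AR_ELEMENT},
            DeviceClass.DESKTOP: {AssetClass.PROJECTION_ELEMENT},
        }

    def set_visible(self, device: DeviceClass, asset: AssetClass, visible: bool) -> None:
        """Enable or disable rendering of one asset class on one device class."""
        if visible:
            self._rules[device].add(asset)
        else:
            self._rules[device].discard(asset)

    def should_render(self, device: DeviceClass, asset: AssetClass) -> bool:
        return asset in self._rules[device]
```

Keeping the policy in data rather than in per-asset code means a cue could, for example, temporarily reveal AR Elements on a projector without rebuilding any client.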

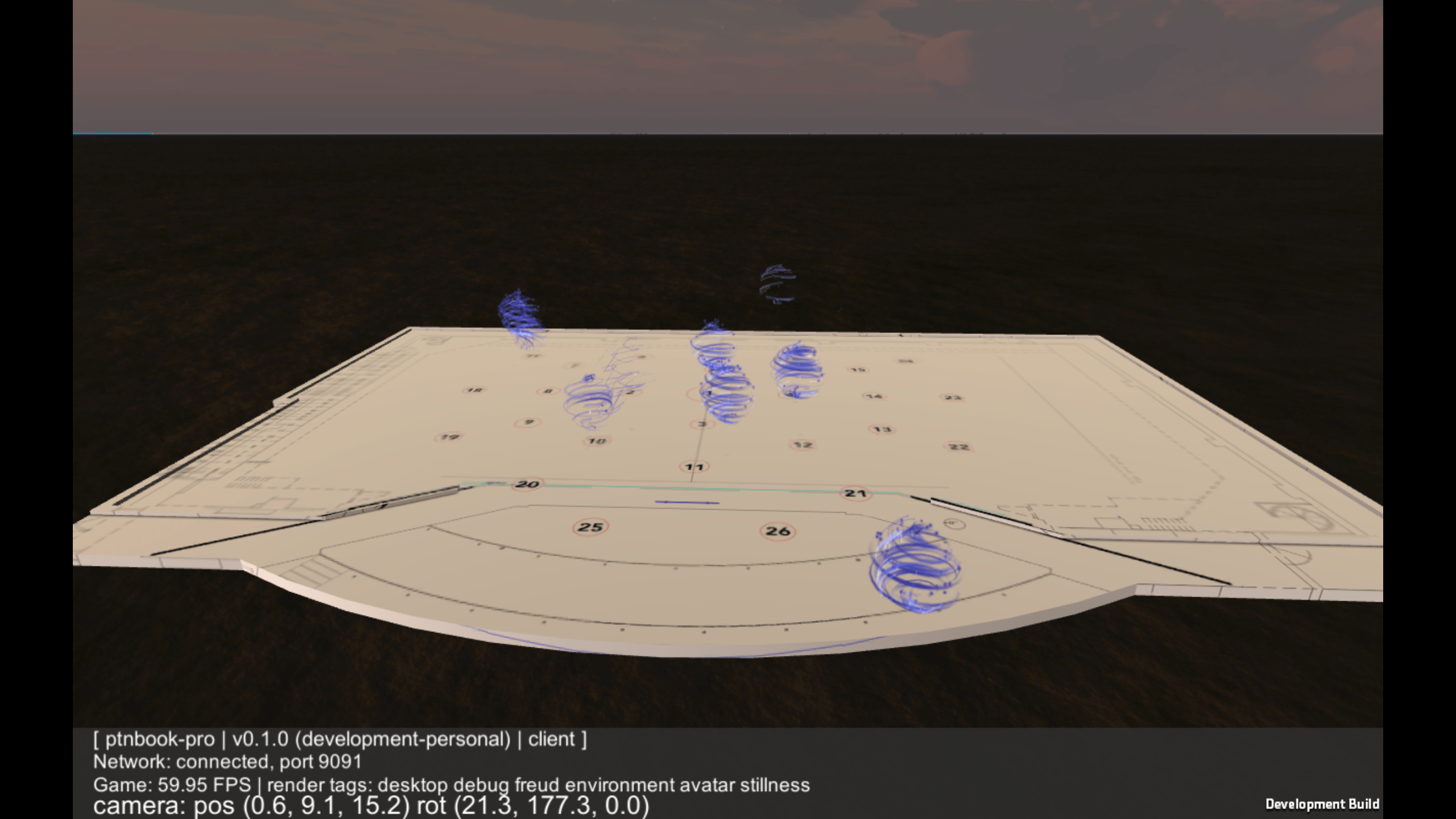

All clients (desktop and mobile) are players in one game world. This allows a unified development approach for the whole system, where each client renders assets and reacts to cues associated with its class. This architecture also allows for “invisible spectator” and “watchdog” master clients that can observe (and possibly interfere with) the current scene in 3D or VR.
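One way to picture “each client reacts to cues associated with its class” is a broadcast in which every player of the shared world receives every cue but only handles those tagged for its class, while watchdog or spectator clients observe everything. The `Cue` and `Client` types, the class labels and the cue name are hypothetical; this is not the Photon Bolt API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Cue:
    name: str
    target_classes: frozenset[str]  # client classes that should react, e.g. {"mobile"}


@dataclass
class Client:
    client_id: str
    client_class: str            # hypothetical labels: "mobile", "projection", "watchdog"
    observe_all: bool = False    # watchdog/spectator clients see every cue
    handled: list[str] = field(default_factory=list)

    def on_cue(self, cue: Cue) -> None:
        """React only to cues for this client's class, unless observing everything."""
        if self.observe_all or self.client_class in cue.target_classes:
            self.handled.append(cue.name)


def broadcast(cue: Cue, clients: list[Client]) -> None:
    """Deliver a cue to every player of the shared world; each client filters by class."""
    for client in clients:
        client.on_cue(cue)
```

Filtering on the receiving side keeps a single code path for all clients, which matches the idea of building mobile and desktop players from one code base.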

Audience member scans a fiducial marker to “anchor” the device

Photos from one of the live performances

Mobile screenshot: AR Element – Ashfall

Our team faced the challenge of integrating our development, testing and code delivery processes into the theatrical production environment. In practice, this meant accommodating strict rehearsal schedules and limited access to the physical space for testing. The application, built simultaneously by multiple developers and asset designers, had to be deployed across 10 smartphones (8 “show” devices and 2 spares) and 4 desktop machines.

The application is built with the Unity 3D game engine and compiled for both mobile and desktop clients from the same code base. It relies on a number of SDKs and frameworks, such as ARCore, the Photon Bolt peer-to-peer networking SDK, and others.

Designers used their own authoring tools (mostly Maya) for 3D modeling and finalized the models in Unity 3D. Tracking asset changes alongside the code requires a version control system capable of handling large binary files (hundreds of megabytes).

Perforce is the VCS of choice at many game development companies. Unlike Git, it is designed to handle changes to large binary files efficiently. For this project, Perforce is configured with three branches: development, stage and production. The development version, the least stable, is promoted to stage once its feature set has been tested and confirmed for the rehearsal. After the rehearsal, a decision is made whether the rehearsed version will be used during the next show run; if so, the code is promoted further to production. The development version of the app runs with additional debug output and uses different network ports to avoid interfering with the rehearsal or show version.
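The branch flow and the port separation can be summarized in a small configuration sketch. The port numbers and the `promote` helper are hypothetical; the text only states that code moves development → stage → production and that the development build uses different ports from the rehearsal and show builds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BranchConfig:
    name: str
    network_port: int   # hypothetical port numbers
    debug_output: bool  # extra debug output only on development


# Hypothetical values; only "development differs from stage/production" comes from the text.
BRANCHES = {
    "development": BranchConfig("development", 27100, debug_output=True),
    "stage": BranchConfig("stage", 27050, debug_output=False),
    "production": BranchConfig("production", 27000, debug_output=False),
}

PROMOTION_ORDER = ["development", "stage", "production"]


def promote(current: str, approved: bool) -> str:
    """Return the branch a build moves to next.

    development -> stage once its features are tested and confirmed for rehearsal;
    stage -> production if the rehearsed version is approved for the next show run.
    Unapproved builds, and builds already in production, stay where they are.
    """
    idx = PROMOTION_ORDER.index(current)
    if not approved or idx == len(PROMOTION_ORDER) - 1:
        return current
    return PROMOTION_ORDER[idx + 1]
```

Separate ports mean a developer testing on the network cannot accidentally join the rehearsal or show session, even when all three versions are installed side by side.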

The Perforce repository is monitored by the Unity Cloud Build service. Any change in any of the three branches triggers application builds for mobile and desktop clients.

Build results are reported to the dev team’s Slack channel as well as to the HockeyApp service. The UCB → HockeyApp integration is a custom in-house solution that uses webhooks to upload built Android “.apk” app packages to HockeyApp. Once the uploaded binary becomes available on HockeyApp, a report is sent to the Slack channel and mobile devices can be updated to the latest version of the app over the air. This significantly simplified and streamlined our deployment process, given that we needed to maintain three separate application versions on 10 mobile devices.
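The shape of such a webhook relay can be sketched as a handler that receives a build-finished notification, uploads the Android package, and posts a chat report. Everything here is hypothetical: the payload fields (`branch`, `platform`, `artifact_path`, `build_number`, `succeeded`) are placeholders rather than Unity Cloud Build’s actual webhook schema, and the upload and notify steps are injected callables rather than real HockeyApp or Slack API calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class BuildEvent:
    # Hypothetical fields; not the real Unity Cloud Build webhook schema.
    branch: str
    platform: str
    artifact_path: str
    build_number: int
    succeeded: bool


def handle_build_webhook(
    payload: dict,
    upload: Callable[[str, str], str],  # (artifact_path, branch) -> download URL
    notify: Callable[[str], None],      # posts a message to the team channel
) -> Optional[str]:
    """Relay one build notification; return the OTA download URL if an .apk was uploaded."""
    event = BuildEvent(
        branch=payload["branch"],
        platform=payload["platform"],
        artifact_path=payload["artifact_path"],
        build_number=int(payload["build_number"]),
        succeeded=bool(payload["succeeded"]),
    )
    tag = f"[{event.branch}] build #{event.build_number} ({event.platform})"
    if not event.succeeded:
        notify(f"{tag} FAILED")
        return None
    if event.platform != "android" or not event.artifact_path.endswith(".apk"):
        # Only Android packages are distributed over the air in this sketch.
        notify(f"{tag} succeeded")
        return None
    url = upload(event.artifact_path, event.branch)
    # Report only after the upload returns, so the message points at an available binary.
    notify(f"{tag} available for OTA update: {url}")
    return url
```

Injecting `upload` and `notify` keeps the relay logic testable without network access; a real deployment would plug in the distribution service’s upload call and a chat webhook.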

For desktop clients, however, the HockeyApp SDK is not available, so deployment was handled by uploading built packages to Google Drive, which syncs them across the four desktop machines (three projection nodes and one master/watchdog machine).

A separate “Log” machine serves as a sink for distributed logging from all devices on the network.
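A minimal version of such a sink can be sketched with UDP datagrams carrying JSON records: every device sends fire-and-forget log lines, and the log machine collects them. The record format, the `device` field and the choice of plain UDP are assumptions for illustration; the text does not say which transport or logging library the production used.

```python
import json
import socket


def make_sender(sink_host: str, sink_port: int, device_id: str):
    """Return a log function that sends one JSON datagram per message to the sink."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    def log(level: str, message: str) -> None:
        record = {"device": device_id, "level": level, "msg": message}
        # Fire-and-forget: sendto does not wait for the sink to acknowledge.
        sock.sendto(json.dumps(record).encode("utf-8"), (sink_host, sink_port))

    return log


def receive_one(sink: socket.socket) -> dict:
    """Block (up to the socket's timeout) until one record arrives, then decode it."""
    data, _addr = sink.recvfrom(65535)
    return json.loads(data.decode("utf-8"))


if __name__ == "__main__":
    # Local demo: the "Log" machine listens on an ephemeral port on this host.
    sink = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sink.bind(("127.0.0.1", 0))
    sink.settimeout(2.0)
    log = make_sender("127.0.0.1", sink.getsockname()[1], "phone-03")
    log("INFO", "anchored to marker_07")
    print(receive_one(sink))
    sink.close()
```

UDP keeps logging from ever blocking a client’s render loop, at the cost of possibly dropping records under load; a TCP or message-queue sink would trade that latency for delivery guarantees.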

Designer workstation, Unity 3D

Rehearsal runs

Mobile screenshot: development version during a show run

Desktop screenshot: master/watchdog node

Mobile devices are mounted on staffs so that audience members can carry them more easily.

Every device is additionally paired with a subwoofer backpack for tactile feedback and a pair of wireless headphones.

More details on the story can be found in Zoe Sandoval’s blog post.